Evaluating in Complexity: A Personal Journey Through Systems Change

This week’s blog post was written by Katie Cramphorn, Research and Development Lead for the Healthy Relationships Partnership. Katie’s work looks at telling the story of systems change in Hartlepool through research and evaluation. Katie is also completing a Master’s by research focusing on delivering effective early intervention with families.

I started working as a Social Data Analyst for the Healthy Relationships Partnership when the project began in 2015. I was initially hired to look at the impact of various interventions with the parents and colleagues we worked with. At the time, our project evaluation was focused on evidencing the need for system change in the way we work with parental relationships. The work began to evolve rapidly, and it became clear that we needed to know more about the context of the system in which we work in order to bring our data to life. We needed stories!

(image source: http://www.weblogcartoons.com/2006/06/23/your-shoes/)

I started to gather those stories: stories of families’ experiences and stories that illustrated the culture and practices of the local workforce. For me, as a Data Analyst who usually works with numbers, not people, this was a daunting prospect! I felt confident talking about evaluation and helping people think about what needed to be done to demonstrate impact, but when it came to talking to people about their experiences I found myself suffering from imposter syndrome. I worried that when speaking to others whose experiences were unfamiliar to me, I’d find myself in difficult situations when they realised I couldn’t relate. It took a while for me to realise that it’s often beneficial not to share experience with the person taking part in your research. It means you can ask genuinely curious questions without contaminating them with your own understanding of their experience.

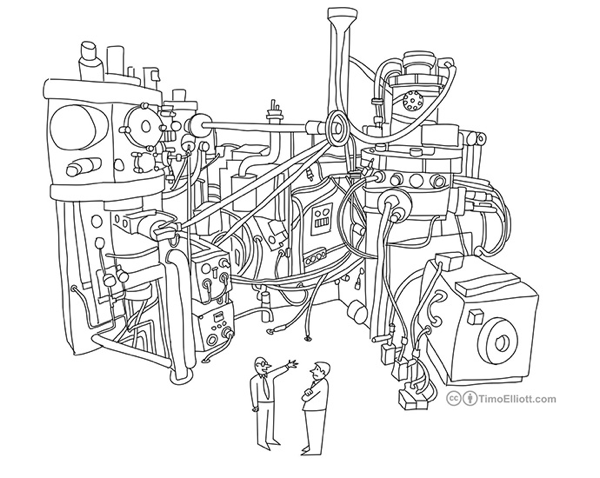

After getting more research experience under my belt, with both parents and professionals, I began to understand more clearly the enormity of evaluating systems change work. Evaluating our interventions and training was straightforward. There’s a Point A, a Point B and an outcome, evaluated against our defined criteria for what we would consider a success. With systems change, we’re looking at a web rather than an A to B journey. We are aware of how difficult it is to ‘measure’ a system that is truly and effectively changing as a result of our own intervention. We have no way of knowing how many of the 20 practitioners who completed a training session went on to talk about our work, or how many people benefitted as a result. I had to come to terms with the fact that truly changing the way people do things means it’s highly likely that they’ll go off and think or do things differently, and they probably won’t call us up to brag about it! This is great in terms of changing practice, but it is not an easy thing to deal with from a research and evaluation perspective, and it means we have to be more creative in showing examples of how our intervention has worked.

Over the past four years, my most valuable bit of learning has been around the importance of professional curiosity and a genuine interest in the experiences of people in this area of work. Aiming to understand whether someone is on the same page, and then building on where they tell us they’re coming from, has shown itself to be massively important in our development. Asking people “When you say that, what do you mean by…” and “When you say you do that, what does that look like?” has opened the door to a lot of interesting conversations and valuable learning that we couldn’t have gained if we had made assumptions based on job roles or other criteria.

It’s important not to make assumptions that may influence our learning and evaluation. There are always challenges to evaluating when you’re on the ‘inside’ of a system and you have to be aware that your position can come with bias. There have been occasions where I’ve found myself questioning how I can evaluate and shed light on something that I myself am helping to develop. I think the culture of our team helps counteract this. Our focus is on creating a learning culture rather than using evaluation to be critical of anyone or anything. We want to use our evaluation to hold a mirror up to the system so that those involved can make the changes to create a system better able to support parental relationships. We’re also really reflective about what we’re doing and why we’re doing it. This makes it really easy to be as honest and unbiased as possible when we carry out evaluation and we’re able to reflect that even our own team could have done some things differently in hindsight. It’s all about the learning! Now I see my position as helping make sense of things from within a change team and spreading that learning as far and wide as we can so that those with an interest in supporting parental relationships, or systems change more generally, can learn from what worked for us and what didn’t.

My role has now developed into Research and Development Lead for the project, and from an evaluation standpoint I think we’re in the most exciting stage of the project so far: telling our story of systems change in Hartlepool and establishing the legacy of our work for strengthening parental relationships. My work right now and moving forward is looking at narrative to illustrate systems change and how it can be sustained. I’m looking forward to carrying out further research locally and even thinking about more creative ways we can tell our story to appeal to more diverse audiences. The project has been an amazing opportunity to show how research can inform working with families and developing practice. Going forward, I think we have a lot to share about our rollercoaster systems change journey!